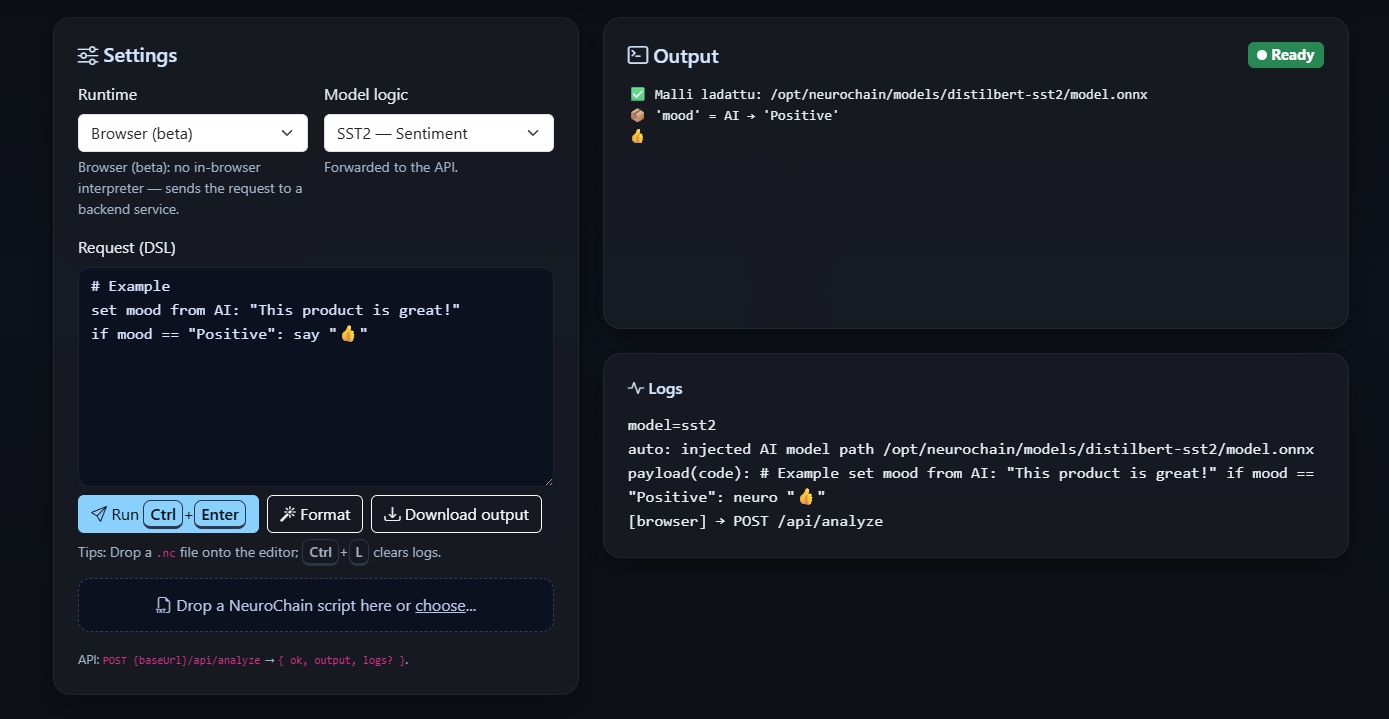

WebUI Demo

Enter NeuroChain DSL commands and run them from your browser. Pick one or many models and see output and logs instantly.

- Model picker: SST2 / Toxic / FactCheck / Intent / MacroIntent

- Multi-model runs (one request per selected model)

- Drag & Drop for .nc files

- Local API Base URL support (connect to your own server)

- Download output & keyboard shortcuts
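
As a rough illustration of "one request per selected model", the sketch below plans one request per model against a configurable API base URL. Only the model names come from the list above. The `/run` path, the `model` and `script` payload fields, the `localhost:8000` address, and the helper names are all hypothetical and are not taken from the NeuroChain API. Nothing is sent over the network; the function only builds the request descriptions.

```python
from dataclasses import dataclass

# Model names as listed in the model picker above.
MODELS = ("SST2", "Toxic", "FactCheck", "Intent", "MacroIntent")


@dataclass(frozen=True)
class PlannedRequest:
    """One request to be sent for one selected model (hypothetical shape)."""
    url: str
    payload: dict


def plan_requests(base_url, selected_models, script, path="/run"):
    """Return one PlannedRequest per selected model.

    `path` and the payload keys are assumptions made for this sketch,
    not the documented NeuroChain endpoint or schema.
    """
    unknown = [m for m in selected_models if m not in MODELS]
    if unknown:
        raise ValueError(f"unknown model(s): {unknown}")
    # Normalise the base URL so a trailing slash does not double up.
    url = base_url.rstrip("/") + path
    return [
        PlannedRequest(url, {"model": model, "script": script})
        for model in selected_models
    ]


if __name__ == "__main__":
    # Hypothetical local server address; the script text is a placeholder.
    planned = plan_requests(
        "http://localhost:8000/",
        ["SST2", "Toxic"],
        "<your NeuroChain DSL script>",
    )
    for request in planned:
        print(request.url, request.payload["model"])
```

Keeping the planning step separate from the actual HTTP call makes the fan-out easy to inspect: selecting N models yields exactly N planned requests, each carrying the same script.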